AI tools, used for everything from writing emails to crunching data, have become indispensable in the workplace. Yet a new study from Kaspersky reveals that in the Middle East, Turkiye, and Africa (META) region, most employees are using them without security training.

The research, “Cybersecurity in the workplace: Employee knowledge and behavior,” highlights a dangerous reality: AI tools are largely being adopted as ‘shadow IT’, used without corporate guidance, which exposes organizations to data leaks and prompt injection attacks.

The findings point to widespread adoption of AI tools across META (including South Africa, Kenya, and Egypt), coupled with a severe lack of preparation for the associated risks:

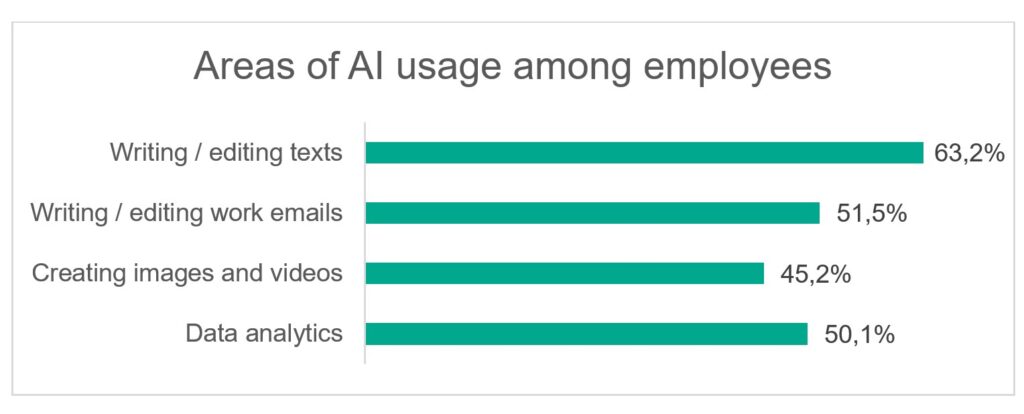

- Ubiquitous Use: A staggering 81% of professionals surveyed in the META region use AI tools for work tasks. The primary uses are writing/editing texts (63.2%), crafting work emails (51.5%), and data analytics (50.1%).

- The Training Deficit: While 94.5% of respondents understand what generative AI is, only 38% have received training focused on the crucial cybersecurity aspects of using neural networks. A full 33% reported receiving no AI-related training at all.

This suggests that sensitive corporate data is likely being fed into public AI models by employees who are unaware of the security implications. Even where AI tools are formally permitted (72.4% of workplaces), the lack of security guidance leaves organizations exposed.

According to Chris Norton, General Manager for Sub-Saharan Africa at Kaspersky, the solution is not an outright ban, but a “tiered access model” backed by comprehensive training.

A balanced approach allows for innovation while upholding security standards. Kaspersky recommends that organizations urgently transition from Shadow AI to Managed AI by taking the following steps:

- Develop a Formal Policy: Implement a company-wide policy that clearly defines which AI tools are permitted, specifies data usage restrictions, and prohibits AI use in sensitive functions.

- Invest in Specialized Training: Go beyond basic “how-to” guides. Train employees on responsible AI usage and provide IT specialists with knowledge on exploitation techniques and practical defense strategies, such as Large Language Models Security training.

- Monitor and Adapt: Conduct regular surveys to track how often and for what purposes AI tools are used. Use a specialized AI proxy to sanitize queries on the fly, removing sensitive data before it reaches the public model.
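
To make the proxy idea more concrete, here is a minimal Python sketch of the kind of redaction step such a proxy might apply before forwarding a prompt. It is an illustration only, not Kaspersky's implementation: the `redact` function, the `REDACTION_RULES` list, and the three patterns are all hypothetical, and a real deployment would need rules tuned to its own data and far broader coverage.

```python
import re
from typing import Dict, Tuple

# Hypothetical rules for illustration; real proxies need much broader coverage.
REDACTION_RULES = [
    ("EMAIL", re.compile(r"\b[\w.+-]+@[\w-]+(?:\.[\w-]+)+\b")),
    # 13 to 16 digits, optionally separated by spaces or hyphens.
    ("CARD", re.compile(r"\b\d(?:[ -]?\d){12,15}\b")),
    ("IPV4", re.compile(r"\b(?:\d{1,3}\.){3}\d{1,3}\b")),
]


def redact(prompt: str) -> Tuple[str, Dict[str, int]]:
    """Replace matches of each rule with a placeholder and count the hits."""
    counts: Dict[str, int] = {}
    for label, pattern in REDACTION_RULES:
        prompt, hits = pattern.subn(f"[{label}]", prompt)
        if hits:
            counts[label] = hits
    return prompt, counts


if __name__ == "__main__":
    cleaned, found = redact(
        "Email jane.doe@example.com about server 10.0.0.12"
    )
    print(cleaned)  # Email [EMAIL] about server [IPV4]
    print(found)    # {'EMAIL': 1, 'IPV4': 1}
```

The per-rule counts could also feed the usage monitoring described above, showing which kinds of sensitive data employees most often try to send. Pattern matching of this sort only catches well-structured identifiers; it would not recognize confidential text such as contract terms or source code.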

By establishing clear policy and security measures, organizations can ensure that the automation and efficiency gains offered by AI are not eclipsed by catastrophic security breaches.